Introduction: Zero-Shot Learning in PyTorch


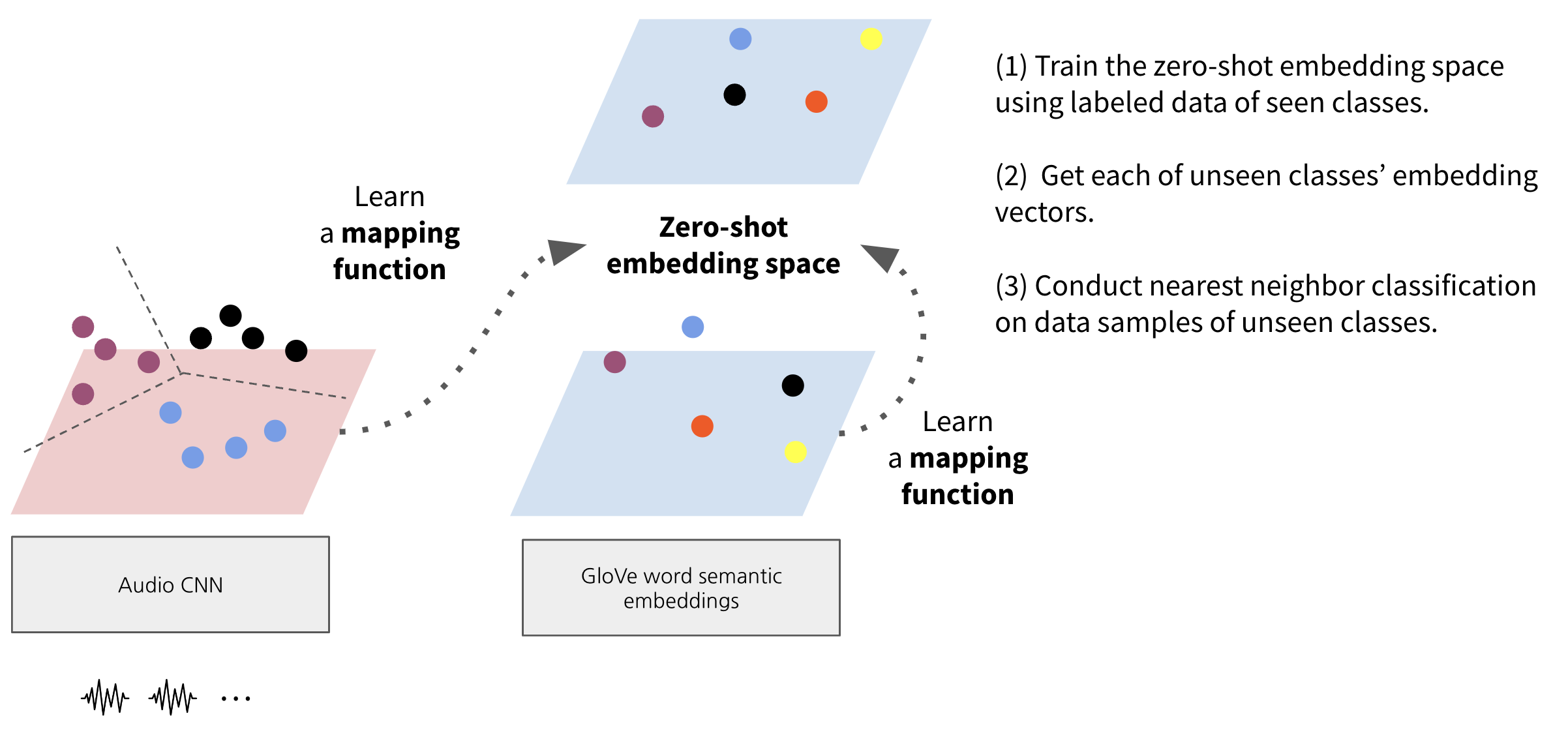

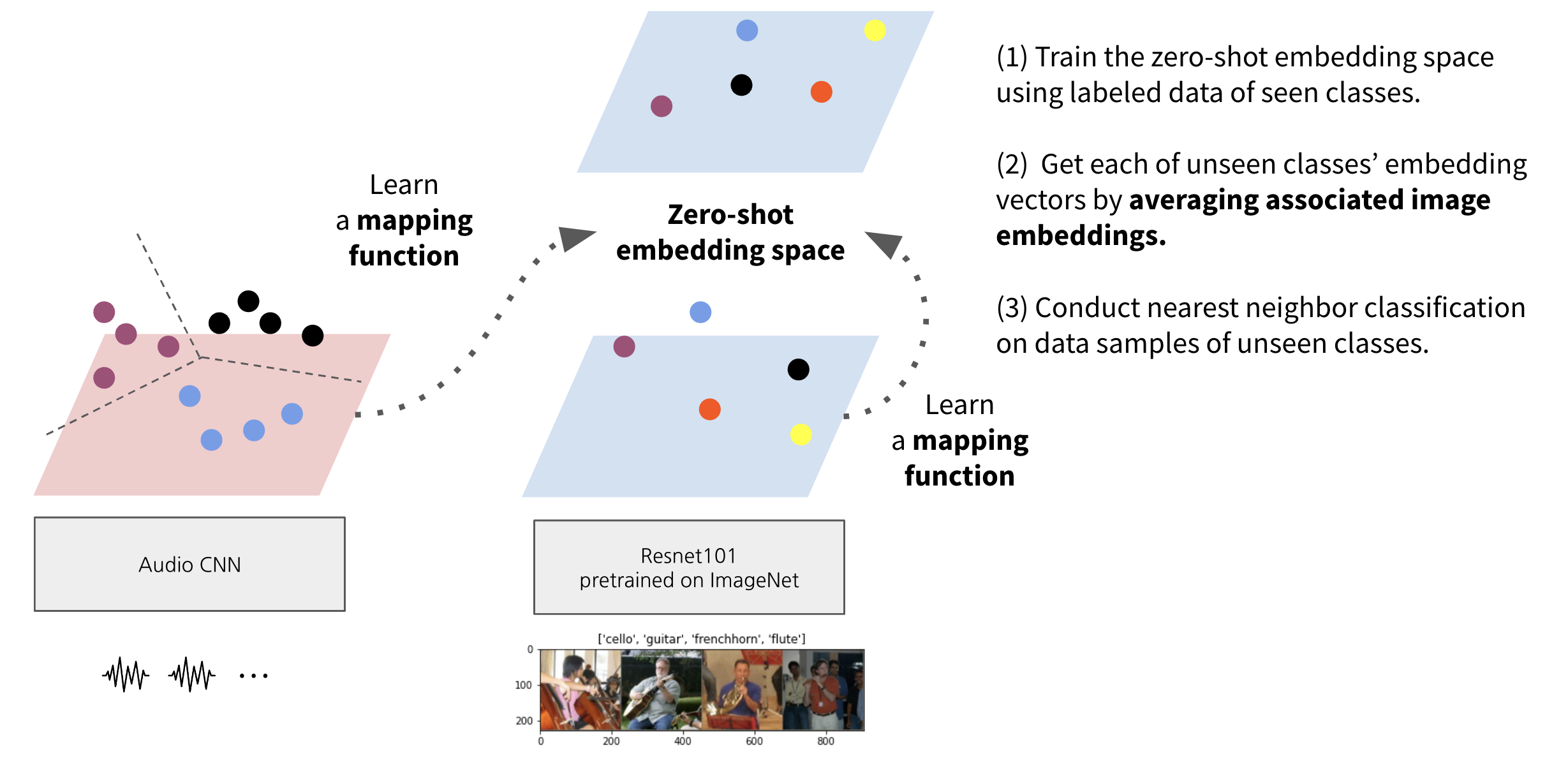

In this coding tutorial, we will train a zero-shot instrument classifier that can predict unseen classes by using two different types of side information.

This tutorial focuses on walking through the zero-shot classification procedure hands-on. As before, we use the TinySOL dataset for the instrument audio and labels because it is small and easy to access.

Both experiments use a similar siamese network architecture. The network learns a common embedding space into which the audio embeddings and the side information embeddings are projected so that they can be compared.
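To make the idea of a common embedding space concrete, here is a minimal sketch of a two-branch model that projects audio features and side information vectors into the same space and compares them with cosine similarity. It is an illustration only, not the architecture used later in this tutorial: the class name `TwoBranchEmbedder`, the helper `predict_classes`, the layer sizes, and the random inputs are all assumptions made for this example.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TwoBranchEmbedder(nn.Module):
    """Projects audio features and side information vectors into one shared space."""

    def __init__(self, audio_dim: int, side_dim: int, joint_dim: int = 128):
        super().__init__()
        # One small projection head per modality; the sizes are illustrative.
        self.audio_proj = nn.Sequential(
            nn.Linear(audio_dim, 256), nn.ReLU(), nn.Linear(256, joint_dim)
        )
        self.side_proj = nn.Sequential(
            nn.Linear(side_dim, 256), nn.ReLU(), nn.Linear(256, joint_dim)
        )

    def forward(self, audio_feat, side_feat):
        # L2-normalize so that a dot product equals cosine similarity.
        a = F.normalize(self.audio_proj(audio_feat), dim=-1)
        s = F.normalize(self.side_proj(side_feat), dim=-1)
        return a, s


def predict_classes(model, audio_feat, class_side_feats):
    """Return, for each clip, the index of the most similar class embedding."""
    model.eval()
    with torch.no_grad():
        a, s = model(audio_feat, class_side_feats)
    scores = a @ s.T  # (num_clips, num_classes) cosine similarities
    return scores.argmax(dim=1), scores


torch.manual_seed(0)
model = TwoBranchEmbedder(audio_dim=64, side_dim=300)
clips = torch.randn(4, 64)        # 4 hypothetical audio feature vectors
class_vecs = torch.randn(3, 300)  # 3 hypothetical class side information vectors
pred, scores = predict_classes(model, clips, class_vecs)
print(pred.shape, scores.shape)
```

Because classes are represented only by their side information vectors, the same `predict_classes` call works for classes that never appeared during training: you pass in the vectors of the unseen classes instead.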

(1) GloVe word embeddings as side information

In the first experiment, we’ll use GloVe word embeddings as the side information.
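As a rough sketch of how class labels can be turned into word vectors, the snippet below parses text in the plain GloVe file format (one word per line followed by its space-separated vector components) and builds one vector per class label. The function names `load_glove` and `class_embedding` are hypothetical, averaging the words of a multi-word label is an assumption rather than the tutorial's stated method, and the two-dimensional vectors are made-up toy values, not real GloVe data.

```python
import io
import numpy as np


def load_glove(lines):
    """Parse GloVe-format text lines ("word v1 v2 ...") into a {word: vector} dict."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        vectors[parts[0]] = np.array([float(x) for x in parts[1:]], dtype=np.float32)
    return vectors


def class_embedding(label, vectors):
    """Average the vectors of the known words in a (possibly multi-word) label."""
    words = [w for w in label.lower().split() if w in vectors]
    if not words:
        raise KeyError(f"no vector found for any word in {label!r}")
    return np.mean([vectors[w] for w in words], axis=0)


# Toy stand-in for a GloVe file; the values are invented for illustration.
toy_file = io.StringIO("bass 1.0 0.0\nclarinet 0.0 1.0\nflute 0.5 0.5\n")
vectors = load_glove(toy_file)
vec = class_embedding("Bass Clarinet", vectors)  # mean of "bass" and "clarinet"
print(vec)
```

With a real GloVe file you would pass an open file handle to `load_glove` instead of the `io.StringIO` object.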

(2) Image feature embeddings as side information

In the second experiment, we’ll use a pretrained image classification model along with the PPMI dataset as the side information. Because it was trained on a large image corpus, the image classification model can extract general visual embeddings from the instrument images in the PPMI dataset.

A repo with ready-to-go code for this tutorial can be found on GitHub. Feel free to use it as a starting point for your own zero-shot experiment.

Requirements

librosa

torch

numpy

torchaudio

torchvision

Pillow

scikit-learn (imported as sklearn)

pandas

tqdm

jupyter

Table of Contents

Here are the links to each section.

Data preparation: we’ll learn how to prepare splits for zero-shot experiments.

Models and data I/O: we’ll learn how to code the siamese architecture, the training procedure, and the prediction procedure.

Training the Word-Audio ZSL model: we’ll walk through the training code for the word-audio zero-shot model.

Evaluating the Word-Audio ZSL model: we’ll learn how to run the zero-shot evaluation and investigate the results of the word-audio zero-shot model.

Training the Image-Audio ZSL model: we’ll walk through the training code for the image-audio zero-shot model.

Evaluating the Image-Audio ZSL model: we’ll learn how to run the zero-shot evaluation and investigate the results of the image-audio zero-shot model.
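As a preview of the data preparation step, the key requirement of a zero-shot split is that the training and test sets share no classes. The sketch below shows one simple way to do this. The helper names `split_classes` and `filter_by_class`, the instrument list, and the file names are placeholders chosen for this example, not the actual split used in the tutorial.

```python
import random


def split_classes(class_names, num_unseen, seed=0):
    """Split class names into disjoint seen (training) and unseen (test) lists."""
    names = sorted(set(class_names))
    if not 0 < num_unseen < len(names):
        raise ValueError("num_unseen must be between 1 and the number of classes - 1")
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(names)
    return sorted(names[num_unseen:]), sorted(names[:num_unseen])


def filter_by_class(samples, allowed):
    """Keep only (path, label) pairs whose label is in `allowed`."""
    allowed = set(allowed)
    return [(path, label) for path, label in samples if label in allowed]


# Placeholder labels and file names, for illustration only.
classes = ["Violin", "Flute", "Trumpet", "Cello", "Oboe", "Accordion"]
samples = [(f"clip_{i}.wav", classes[i % len(classes)]) for i in range(12)]

seen, unseen = split_classes(classes, num_unseen=2)
train_samples = filter_by_class(samples, seen)
test_samples = filter_by_class(samples, unseen)
print(len(train_samples), len(test_samples))
```

Splitting by class rather than by sample is what makes the evaluation zero-shot: every test clip belongs to a class the model has never been trained on.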